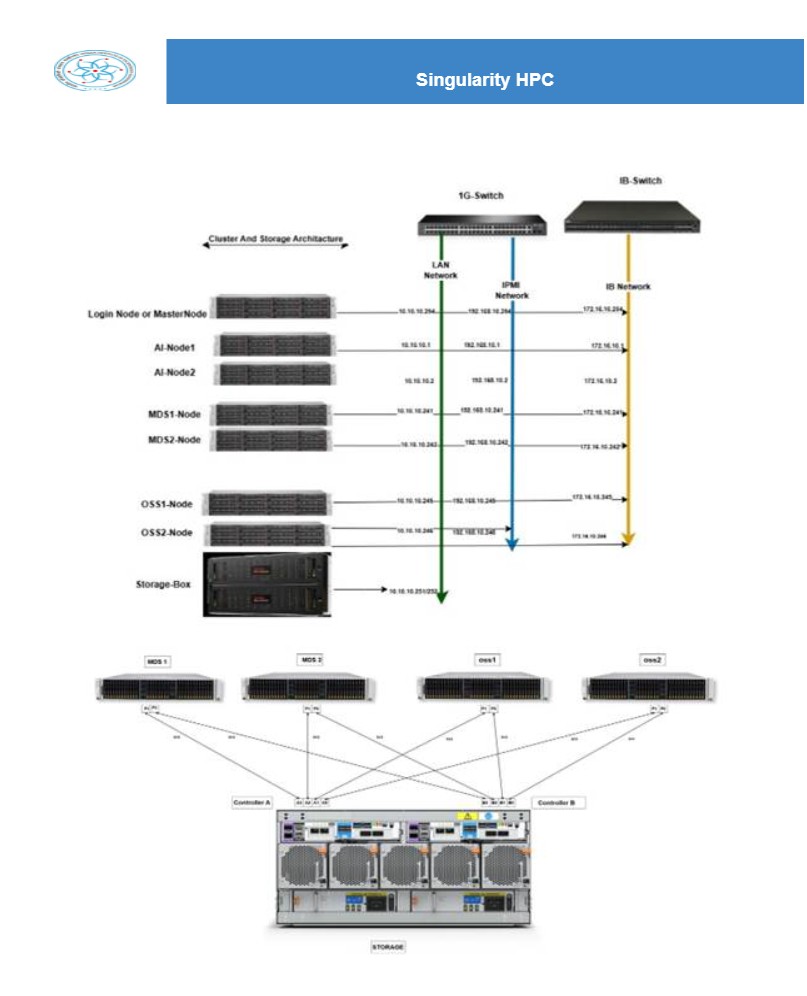

Singularity Layout

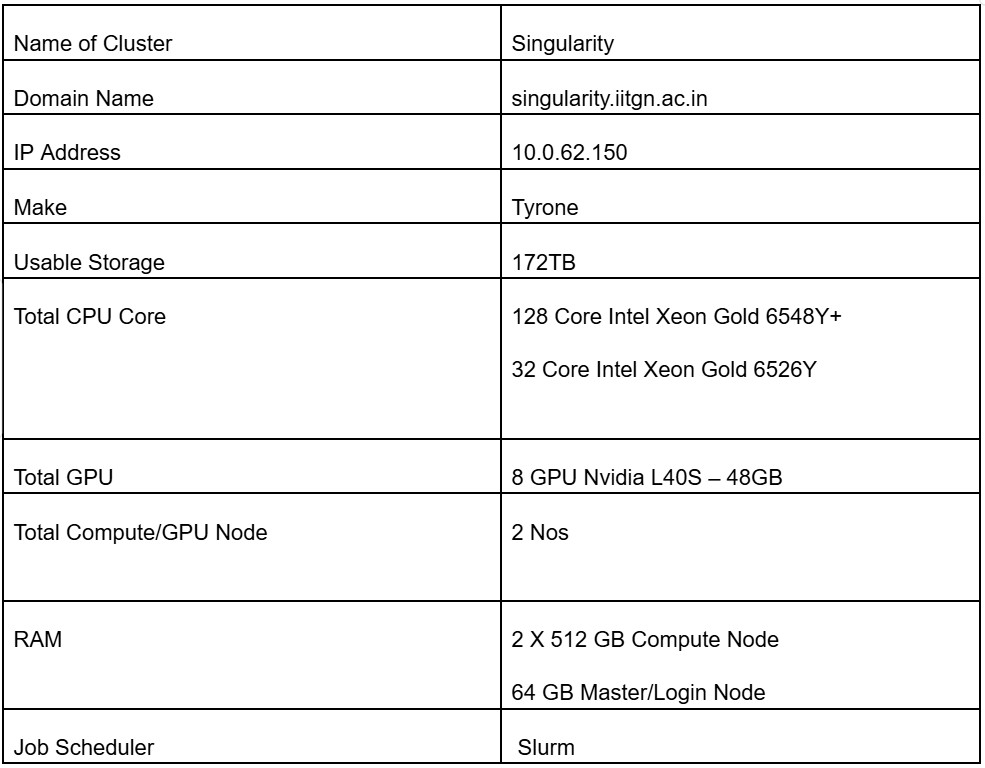

Singularity Details

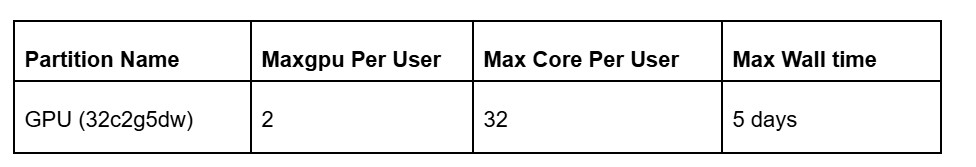

Singularity Queuing System

To request an account, please 1) submit the Google Form request (click the Apply Here menu on this webpage) and 2) send an email to raviraj.s@iitgn.ac.in with a copy to your supervisor. Please also let us know the duration for which the account is required and the list of software you wish to run.

The quota for each user is 500 GB in the home directory.

The SLURM scheduler automatically finds the required number of processing cores across nodes, even if a node is partially used. Please do not explicitly specify a particular node or the number of nodes in the script. A priority-based queueing system is implemented so that all users get a fair share of the available resources. Priority is decided on multiple factors, including job size, queue priority, past and present usage, and time spent in the queue.
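As an illustration of the rule above, the following Python sketch generates a batch script that requests only a total core count and leaves node placement to SLURM. The function name `render_job_script`, the job name, the time limit and the program path are hypothetical placeholders, not values prescribed by this cluster; partition or account options, if required here, are not shown because they are not documented on this page.

```python
def render_job_script(job_name: str, ntasks: int, walltime: str, command: str) -> str:
    """Return the text of a SLURM batch script that requests cores only.

    The script deliberately contains no --nodes or --nodelist directive,
    so the scheduler is free to pick cores from any (partially used) node.
    """
    if ntasks < 1:
        raise ValueError("ntasks must be at least 1")
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",   # name shown in the queue
        f"#SBATCH --ntasks={ntasks}",       # total number of tasks (cores)
        f"#SBATCH --time={walltime}",       # wall-clock limit, HH:MM:SS
        "#SBATCH --output=job-%j.out",      # %j expands to the job ID
        "",
        f"srun {command}",                  # launch the program on the allocated cores
    ]
    return "\n".join(lines) + "\n"
```

For example, the output of `render_job_script("demo", 16, "02:00:00", "./my_program")` could be saved as `job.sh` and submitted with `sbatch job.sh`.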

It is strongly recommended that users back up their files and folders periodically, as the support team has no mechanism to back up users' data. An automatic email will be sent to the Supercomputer user community once 75% of the home space is used. Another automatic email will be sent to the users once 85% of the home space is used. Deletion of files by the administrator will commence within 24 hours of the second email. Files in the home directory will be deleted automatically 21 days after their last time stamp/update.
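To help decide what to back up, a user could list the files that are approaching the 21-day limit. The sketch below is only a minimal example: it assumes that "last time stamp/update" refers to the file modification time, and the helper name `files_older_than` is hypothetical.

```python
import os
import time
from pathlib import Path


def files_older_than(root, days, now=None):
    """Return files under `root` last modified more than `days` days ago.

    Assumption: the modification time (mtime) is the relevant time stamp.
    """
    now = time.time() if now is None else now
    cutoff = now - days * 86400  # 86400 seconds per day
    stale = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            try:
                mtime = path.lstat().st_mtime
            except OSError:
                continue  # file vanished or is unreadable; skip it
            if mtime < cutoff:
                stale.append(path)
    return sorted(stale)
```

For instance, `files_older_than(Path.home(), days=14)` returns the files that have not been modified for two weeks, leaving roughly a week to copy them elsewhere before the 21-day limit is reached.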

Users are strictly NOT ALLOWED to run any jobs on the Master Nodes or on any other node in an interactive manner. Users must run jobs only through scripts submitted to the job scheduler.